Artificial Intelligence (AI) is no longer a far-off future technology.

It’s something we interact with almost every day.

Thanks to modern GPT-based tools, AI has taken center stage in almost every walk of life. But where did it all begin? When did businesses start adopting machine learning, deep learning and automation in the way we know today?

When did the hype begin? Was it around the early 2010s?

It all started with the big data explosion. GPUs made deep learning fast, and frameworks such as TensorFlow and PyTorch made headlines by making AI more accessible.

Today, AI is used extensively by tech giants such as Google, Amazon, Facebook and Microsoft, to name a few. It powers their recommendation systems, search, ad targeting and voice assistants, triggering a domino effect across industries.

And it’s no secret that AI adoption has gone mainstream, especially with the generative AI wave that followed OpenAI’s ChatGPT launch in late 2022.

Even though 70% of organizations now use AI in some capacity, it is still a technology in which executives have not yet put their complete faith.

So, what’s causing this disconnect?

Let’s explore the seven AI adoption challenges that organizations face.


At a Glance:

| AI Adoption Challenge | Core Issue | Solution in a Nutshell |
| --- | --- | --- |
| Data Quality | Fragmented Data | Governance & Cloud Migration |
| Ethics & Trust | Compliance | Governance Frameworks |
| Skills Gap | Resistance | Training & AI Literacy |
| Legacy Systems | Incompatibility | APIs & Hybrid Cloud |
| ROI Uncertainty | Financial Metrics | Continuous Investment |
| Culture | Resistance | Leadership & Vision |
| Security | Vulnerabilities | AI Firewalls & Privacy |

Data Quality, Availability & Integration Problems

AI tools are only worthwhile if you provide them with relevant data.

Yet data is often siloed across departments, locked inside legacy systems that rarely talk to each other.

As a result, when AI systems are fed data one department at a time, response quality suffers. AI systems make better decisions when they have a holistic, bird’s-eye view of the information, so fragmented inputs caused by integration problems often produce half-baked outputs. Limited data availability is one of the primary reasons many AI systems struggle to generate accurate results.

With generative AI, the data sets involved need to be assessed even more critically and must be of high quality. Weak or missing data foundations often lead to generic outputs, which pose a further threat to the systems they are integrated with.

How to Fix:

Implement data governance and stewardship by establishing clear protocols for data collection, storage and usage.

Invest in data cleansing and organization tools, and deploy AI-assisted and ETL (extract, transform, load) tools to unify the fragmented data sets held in legacy systems.

Shift data to modern cloud-based infrastructure capable of the large-scale processing that advanced ML requires.
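To make the ETL idea concrete, here is a minimal Python sketch of unifying two departmental silos into a single customer view. The CRM and billing extracts, their field names and the `normalize_id`/`unify` helpers are all hypothetical; a production pipeline would typically rely on a dedicated ETL or data-integration tool rather than hand-written loops.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical extracts from two departmental silos with different conventions.
crm_rows = [
    {"CustomerID": "C-001", "Name": " Alice Smith ", "Email": "ALICE@EXAMPLE.COM"},
    {"CustomerID": "C-002", "Name": "Bob Jones", "Email": "bob@example.com"},
]
billing_rows = [
    {"cust_id": "1", "total_spend": "1200.50"},
    {"cust_id": "2", "total_spend": ""},    # blank value to be cleansed
    {"cust_id": "3", "total_spend": "80"},  # customer missing from the CRM
]


@dataclass
class Customer:
    customer_id: int
    name: Optional[str] = None
    email: Optional[str] = None
    total_spend: float = 0.0


def normalize_id(raw: str) -> int:
    """Map both 'C-001' (CRM style) and '1' (billing style) to the same integer key."""
    return int(raw.strip().removeprefix("C-"))


def unify(crm: List[dict], billing: List[dict]) -> Dict[int, Customer]:
    customers: Dict[int, Customer] = {}
    # Transform CRM rows: trim names and lowercase emails.
    for row in crm:
        cid = normalize_id(row["CustomerID"])
        customers[cid] = Customer(cid, row["Name"].strip(), row["Email"].strip().lower())
    # Transform billing rows: treat blank amounts as 0.0 and merge on the shared key.
    for row in billing:
        cid = normalize_id(row["cust_id"])
        record = customers.setdefault(cid, Customer(cid))
        record.total_spend = float(row["total_spend"] or 0.0)
    # "Load" step: here just a dict; in practice, a warehouse or lake table.
    return customers


if __name__ == "__main__":
    for customer in unify(crm_rows, billing_rows).values():
        print(customer)
```

Most of the work happens in the transform step: reconciling ID formats, trimming and lowercasing text, and defaulting blank amounts. These are the kinds of inconsistencies that leave AI models fed with siloed data producing half-baked outputs.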

Trust, Ethics and Regulatory Compliance

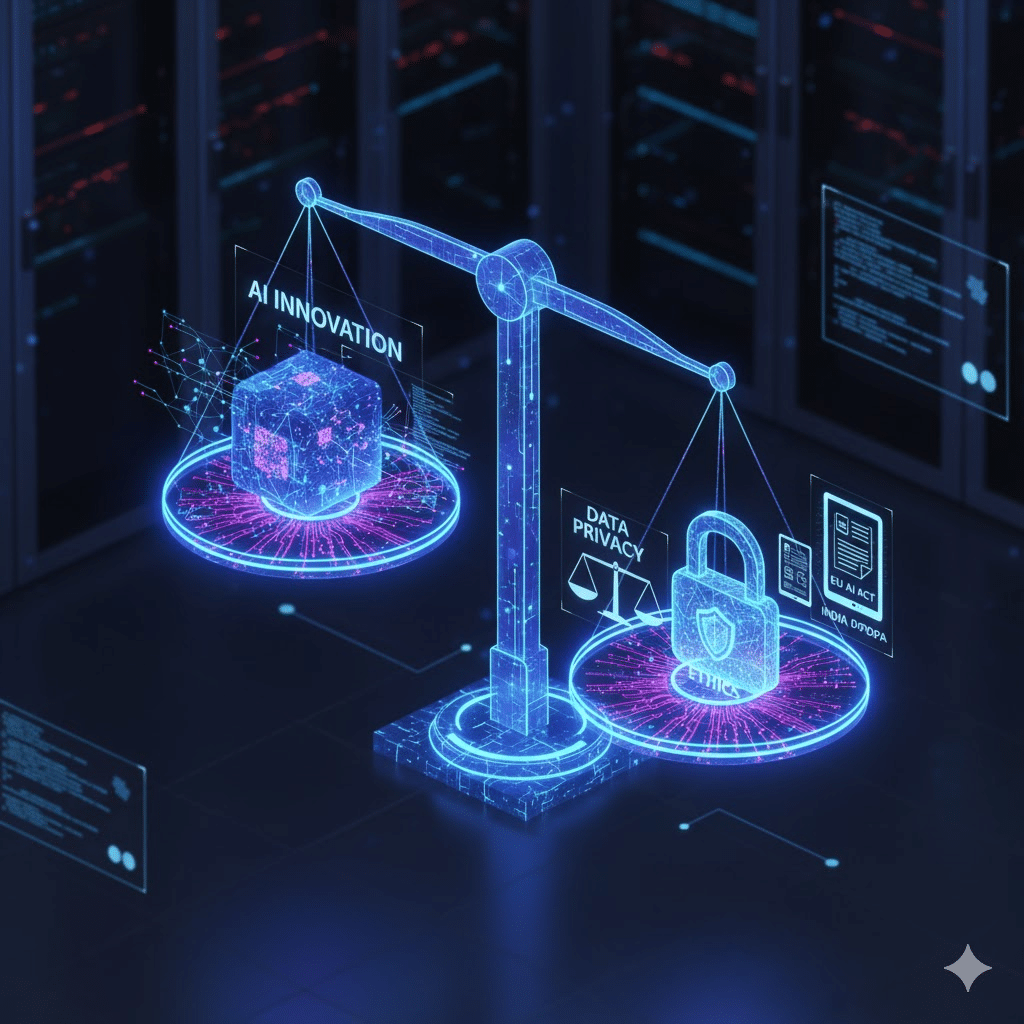

When you integrate AI systems with your existing infrastructure and business processes, concerns arise around data privacy, data security and other ethical considerations.

With new regulations such as the EU AI Act and India’s Digital Personal Data Protection Act (DPDPA), compliance requirements have become more stringent. Overcoming these barriers calls for a more calculated approach.

How to Fix:

Start by establishing a clear AI governance model across the organization, built on the principles of fairness, accountability and transparency.

Introduce AI governance protocols and platforms to monitor data usage, track model lineage and maintain audit trails.

Adopt explainable AI techniques that provide clear insight into how models reach their decisions.
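As an illustration of what tracking model lineage and maintaining an audit trail can look like in practice, the sketch below records dataset, training and deployment events in a hash-chained, append-only log. The `AuditTrail` class, the event names and the sample values are hypothetical and do not reflect the API of any particular governance platform; the hash chain simply makes later edits to earlier entries detectable.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List

GENESIS_HASH = "0" * 64


class AuditTrail:
    """Append-only log in which every entry stores the hash of the previous
    entry, so editing any earlier entry breaks the chain."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys gives a stable serialization, hence a reproducible hash.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, event: str, **details) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    # Lineage: which data produced which model version, and who approved deployment.
    trail.record("dataset_registered", dataset="customers_2024", rows=120000)
    trail.record("model_trained", model="churn-model", version="1.2.0", dataset="customers_2024")
    trail.record("model_deployed", model="churn-model", version="1.2.0", approved_by="risk-committee")
    print(trail.verify())  # True
    trail.entries[1]["details"]["version"] = "9.9.9"  # simulate tampering
    print(trail.verify())  # False
```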

Skills Shortage & Change Resistance

Most AI projects fail if they aren’t backed by a strong and clear strategy.

While there are plenty of AI tools out there, only a few deliver real results. If a tool isn’t backed by credible data sources, or managed by people with the right context and expertise, it can derail even the best implementation.

The skills gap and resistance to change are among the most common roadblocks to AI adoption that organizations experience.

The fear of being replaced is natural. But adopting AI practices and updating your skills will only give you a head start.

How to Fix:

Provide training sessions that upskill or reskill existing staff in AI.

Introduce low-code/no-code tools and practices to build basic AI literacy.

Build a culture of innovation and continuous learning that frames AI as an augmentation of human ability.


Integration Challenges with Legacy Systems

Most legacy systems are rigid, outdated and rarely designed to communicate with modern AI architectures. When organizations try to introduce AI into these systems, incompatibility issues arise.

These problems often stem from faulty API integrations, incompatible data formats or slow processing speeds. A lack of interoperability also leads to data loss, reduced system performance and fragmented workflows.

Constrained by aging infrastructure, even the most advanced AI models fail to perform optimally.

How to Fix:

Gradually migrate legacy systems to API-friendly architectures hosted on hybrid cloud environments.

Use AI-compatible middleware, APIs and integration tools to bridge the gap between old and new systems.

Start with pilot integrations, test performance and scale once compatibility and efficiency are validated.
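Middleware often comes down to adapters that translate between old and new formats. The following Python sketch assumes a hypothetical fixed-width record layout (`LEGACY_LAYOUT`) exported by a legacy system and converts each record into JSON that a modern, API-based AI service could consume. Real layouts, field names and target schemas will differ.

```python
import json
from typing import Dict, Tuple

# Hypothetical fixed-width layout of a legacy export: (start, end) slice bounds per field.
LEGACY_LAYOUT: Dict[str, Tuple[int, int]] = {
    "account_id": (0, 6),
    "name": (6, 26),
    "balance_cents": (26, 34),
    "opened": (34, 42),  # date stored as YYYYMMDD
}
RECORD_WIDTH = 42


def legacy_to_json(record: str) -> str:
    """Adapter: translate one fixed-width legacy record into a JSON payload
    that a modern, API-based AI service could accept."""
    if len(record) < RECORD_WIDTH:
        raise ValueError(f"expected at least {RECORD_WIDTH} characters, got {len(record)}")
    raw = {key: record[start:end].strip() for key, (start, end) in LEGACY_LAYOUT.items()}
    opened = raw["opened"]
    payload = {
        "account_id": raw["account_id"],
        "name": raw["name"],
        "balance": int(raw["balance_cents"]) / 100,            # cents -> currency units
        "opened": f"{opened[:4]}-{opened[4:6]}-{opened[6:]}",  # YYYYMMDD -> ISO 8601
    }
    return json.dumps(payload)


if __name__ == "__main__":
    sample = f"{'A00042':<6}{'Jane Doe':<20}{'00012550':>8}{'20190315':<8}"
    print(legacy_to_json(sample))
    # {"account_id": "A00042", "name": "Jane Doe", "balance": 125.5, "opened": "2019-03-15"}
```

Running an adapter like this over a sample of real records during a pilot is a cheap way to validate compatibility before scaling.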

Cost, Scalability & ROI Uncertainty

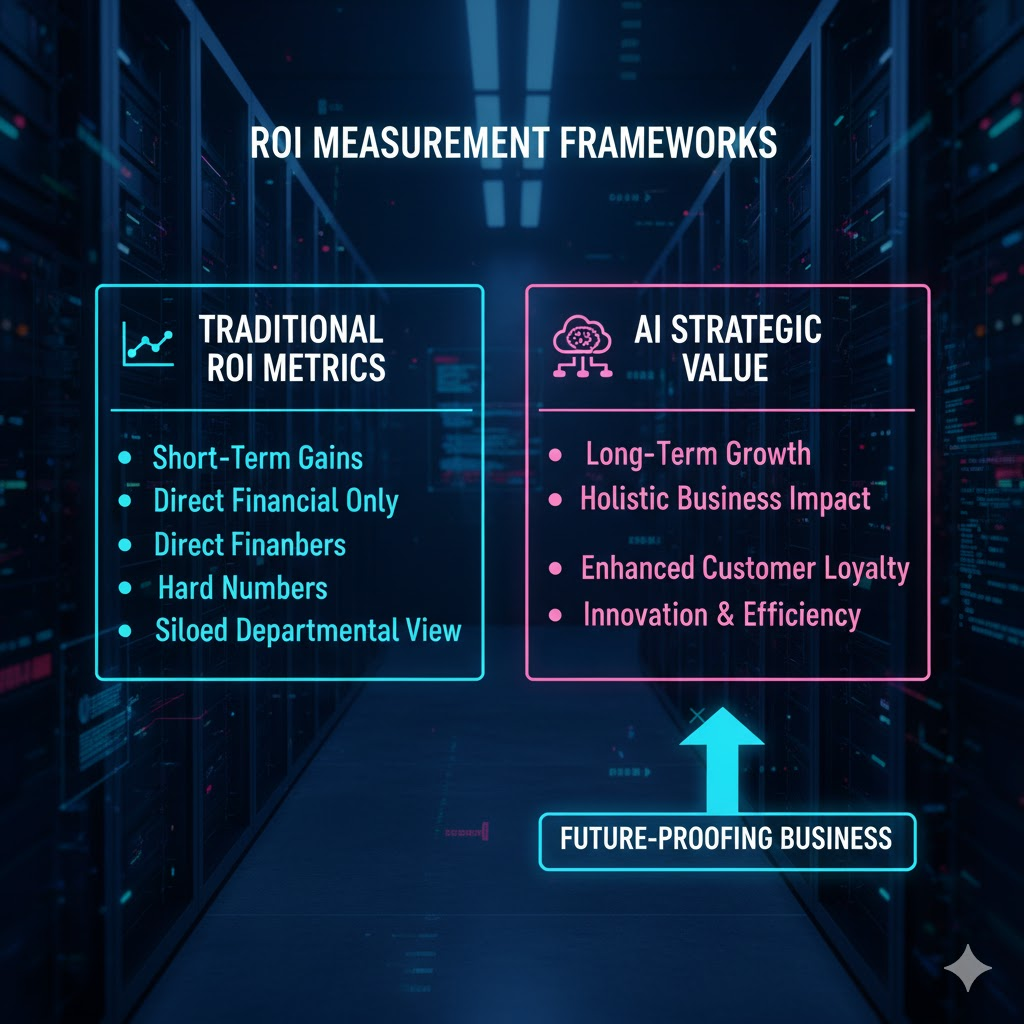

When building out AI infrastructure, estimating ROI accurately is a challenge in its own right. Assessments based on traditional financial metrics often miss the strategic value that modern AI systems bring to customers.

Many executives measure how much they are getting in return for every dollar spent.

They have strategies, but where they truly fall short is in applying the right measurement frameworks.

| Stage | Focus | Key Metric |
| --- | --- | --- |
| Stage 1 | Pilot Phase | Time saved / tasks automated |
| Stage 2 | Scaling | Process efficiency |
| Stage 3 | Optimization | Predictive accuracy |
| Stage 4 | Strategic Impact | Revenue uplift & CX improvement |

This measurement gap ultimately becomes a core challenge for organizations looking to adopt robust AI systems.
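To see why judging AI by short-term financial metrics can mislead, consider a hypothetical Stage 1 pilot whose only measured benefit is time saved. The Python sketch below computes classic ROI, (benefit − cost) / cost, cumulatively month by month. The `PilotResult` fields and all figures are illustrative assumptions, not benchmarks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PilotResult:
    hours_saved_per_month: float  # Stage 1 metric: time saved by automation
    hourly_cost: float            # assumed fully loaded cost of one staff hour
    monthly_run_cost: float       # e.g. licences, cloud compute, maintenance
    upfront_cost: float           # one-off build and integration cost


def roi(benefit: float, cost: float) -> float:
    """Classic ROI: net return per dollar spent."""
    return (benefit - cost) / cost


def cumulative_roi(p: PilotResult, months: int) -> float:
    benefit = p.hours_saved_per_month * p.hourly_cost * months
    cost = p.upfront_cost + p.monthly_run_cost * months
    return roi(benefit, cost)


def breakeven_month(p: PilotResult, horizon: int = 60) -> Optional[int]:
    """First month in which cumulative ROI is no longer negative, if any."""
    for month in range(1, horizon + 1):
        if cumulative_roi(p, month) >= 0:
            return month
    return None


if __name__ == "__main__":
    pilot = PilotResult(hours_saved_per_month=200, hourly_cost=50,
                        monthly_run_cost=2_000, upfront_cost=40_000)
    for month in (3, 6, 12):
        print(f"month {month:>2}: ROI {cumulative_roi(pilot, month):+.1%}")
    print("break-even month:", breakeven_month(pilot))
```

With these example figures (200 hours saved per month at $50 per hour, $2,000 monthly running cost, $40,000 upfront), cumulative ROI is negative for the first four months, breaks even in month 5 and reaches 87.5% by month 12. A review at month 3 would label the project a loss, which is why treating AI as a continuous investment matters.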

How to Fix:

Start with AI automation where results are clear and measurable, such as customer service automation, predictive analytics and recommendation engines.

Treat AI as a continuous investment rather than a one-time expense.

Migrate data to modern cloud-based data warehouses or data lakes designed to handle the massive data volumes that advanced ML requires.

Hidden Barrier of Organizational Culture

Many organizations face a hidden barrier to AI adoption: cultural resistance.

Organizations with deeply entrenched cultures often meet resistance during implementation. Executives who rely heavily on traditional ways of working often struggle to shift to a modern architecture.

This is where leaders earn their stripes: they become the winds of change and guide their teams toward their goals.

How to Fix:

Business leaders should become champions by adopting AI tools themselves and communicating a clear vision.

They can encourage experimentation by introducing innovation challenges.

Position AI as a co-pilot that automates mundane tasks, frees up employee time and increases the value of people’s work.

AI Security & Privacy Risks

We live in an age where adopting agentic AI has become a trend.

Agentic AI systems are AI agents capable of performing complex, multi-step tasks autonomously.

The potential of these agents is immense, but so are the risks. Models trained on sensitive or unfiltered data can unintentionally expose private information or reproduce biases present in their training sets. Attackers also actively exploit AI vulnerabilities through model inversion, data poisoning and adversarial attacks.

These attacks can lead to serious breaches. As AI systems become more interconnected, the attack surface expands, making it easier for bad actors to infiltrate systems through weak links in AI-driven automation pipelines.

| Threat | Risk Level | Mitigation |
| --- | --- | --- |
| Model Inversion | High | Differential Privacy |
| Data Poisoning | Medium | Regular AI Security Audits |
| Adversarial Attacks | High | AI Firewalls & Monitoring |

How to Fix:

Conduct regular AI security audits to identify vulnerabilities within models and data pipelines.

Use federated learning and differential privacy techniques to train models without directly exposing raw data.

Employ AI firewalls and real-time monitoring tools to detect suspicious activities within intelligent systems.

Establish cross-departmental AI risk committees to oversee compliance, data governance, and cybersecurity policies.
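Differential privacy is easiest to see on a simple counting query. The Python sketch below implements the Laplace mechanism: it adds noise with scale 1/ε to a count whose sensitivity is 1, which makes that single query ε-differentially private. The patient records and the `age` field are hypothetical, and the sketch covers neither federated learning nor privacy budgets across repeated queries. It also uses Python’s non-cryptographic `random` module, so for production use a vetted differential-privacy library is the safer choice.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale): the difference of two independent
    exponential variables with mean `scale` is Laplace-distributed."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: Iterable[dict], predicate: Callable[[dict], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query. Adding or removing one person
    changes a count by at most 1 (sensitivity 1), so noise with scale
    1/epsilon makes this single query epsilon-differentially private."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded only to make the demo repeatable
    patients = [{"age": age} for age in range(100)]  # hypothetical records
    for eps in (0.1, 1.0, 10.0):
        answer = private_count(patients, lambda r: r["age"] >= 65, eps, rng)
        print(f"epsilon={eps:>4}: noisy count of patients aged 65+ = {answer:.1f}")
```

Smaller values of `epsilon` add more noise and give stronger privacy; larger values return more accurate answers at the cost of weaker guarantees.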

Concluding Thoughts

Adopting AI can be challenging.

It’s not just about plugging in new tools or training models; it’s more about changing how the organization thinks, works and makes important decisions.

In today’s era, every business wants to be “AI-powered,” but few realize that the real transformation happens behind the scenes. It’s in the culture you build, the data you trust and the people you empower.

The barriers we see today, from data issues to skills gaps and system integration, aren’t just obstacles; they’re lessons. Every AI adoption challenge teaches organizations to be more adaptive, transparent and intentional about how they use AI.

Because at the end of the day, AI doesn’t replace human intelligence; it amplifies it.

And those who understand this balance will be the ones to lead the change, not just follow it.

+971 4 2417179

+971 52 181 0546

info@branex.ae